In an earlier post published before the election, we looked at how successful Donald Trump had been at using Facebook compared with Hillary Clinton. While the outcome of the election was, and remains, a shock to many, those watching the clear signals from social media engagement were perhaps less surprised. With the help of EzyInsights Data Analyst Varpu Rantala, we took a deeper look at which news publishers benefitted in the run-up to the 2016 US Presidential Election.

We started with this guide to US media across the political spectrum, although we have not included every outlet from the chart:

We added alternative publishers to the left and right of the mainstream, including Mother Jones, Daily Kos, Conservative Tribune, Right Wing News and others. It is reasonable to assume these sit even further to the left and right than the mainstream publishers in the diagram. So how did they do?

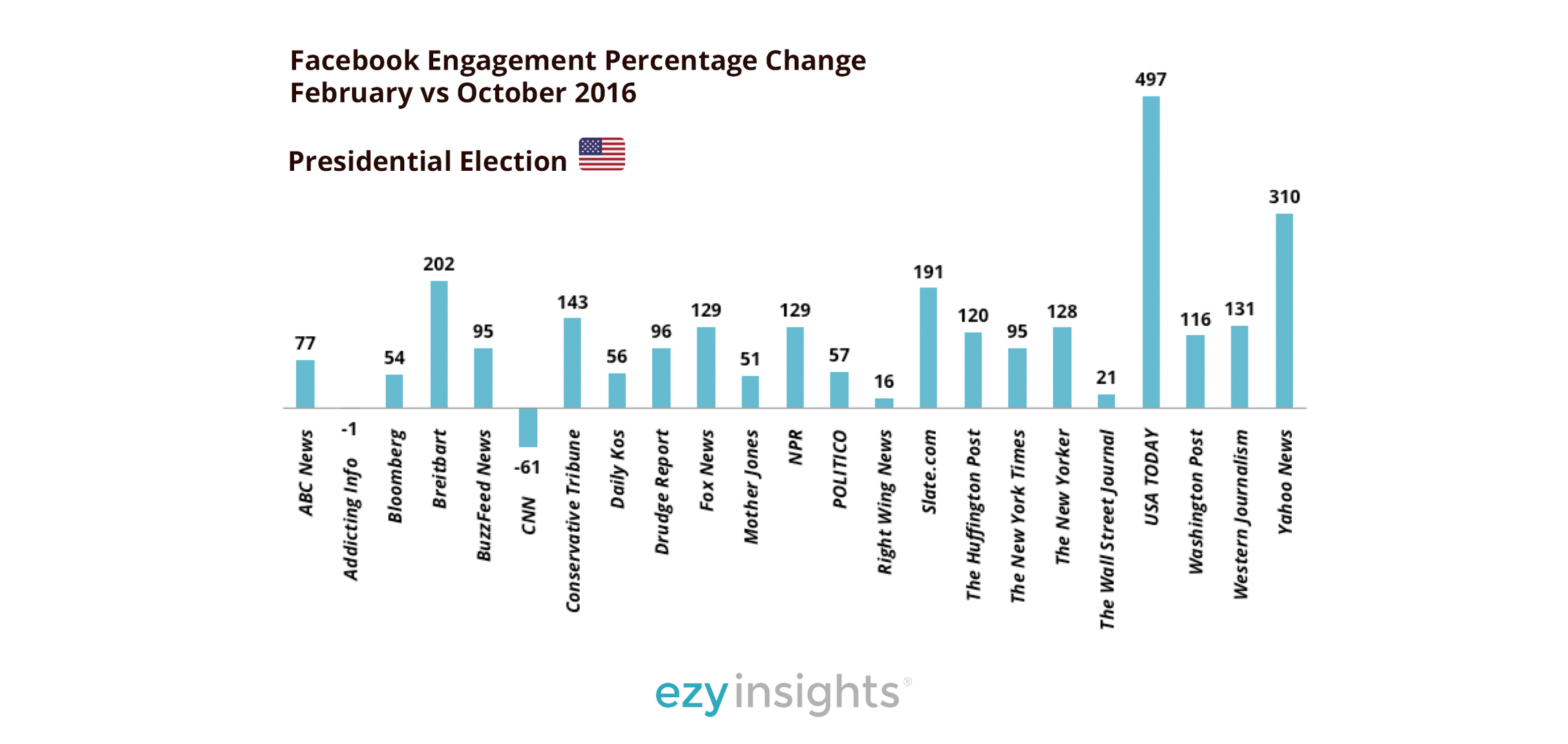

This graph shows the percentage change in engagement between February and October 2016, the month just before the election. The key takeaways are:

- The run-up to the election benefitted most publishers greatly.

- CNN was the one clear loser, down 61% from earlier in the year.

- Breitbart, Western Journalism and Conservative Tribune saw greater increases than their left-leaning counterparts, Mother Jones, Daily Kos and Addicting Info.

- Yahoo News and USA Today, right in the middle of the spectrum, saw the biggest gains.

- Despite already being very large, Fox saw a significant percentage gain.
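The figures in the graph are relative changes between the two months. As a minimal sketch, assuming "engagement" is a simple monthly total of likes, comments and shares per page, the calculation looks like this in Python. The page names and numbers below are hypothetical placeholders, not EzyInsights data.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    if before == 0:
        # A page with no baseline engagement has no meaningful percentage change.
        raise ValueError("baseline engagement must be non-zero")
    return (after - before) / before * 100.0


# Hypothetical monthly engagement totals as (February, October); NOT real data.
engagement = {
    "Page A": (1_000_000, 390_000),
    "Page B": (200_000, 500_000),
}

for page, (feb, october) in engagement.items():
    print(f"{page}: {percent_change(feb, october):+.0f}%")
```

On this scale, a page whose October engagement falls to 39% of its February level shows up as a 61% drop, while a large page and a small page that both double their engagement show the same +100%, which is why an already very large publisher posting a significant percentage gain is notable.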

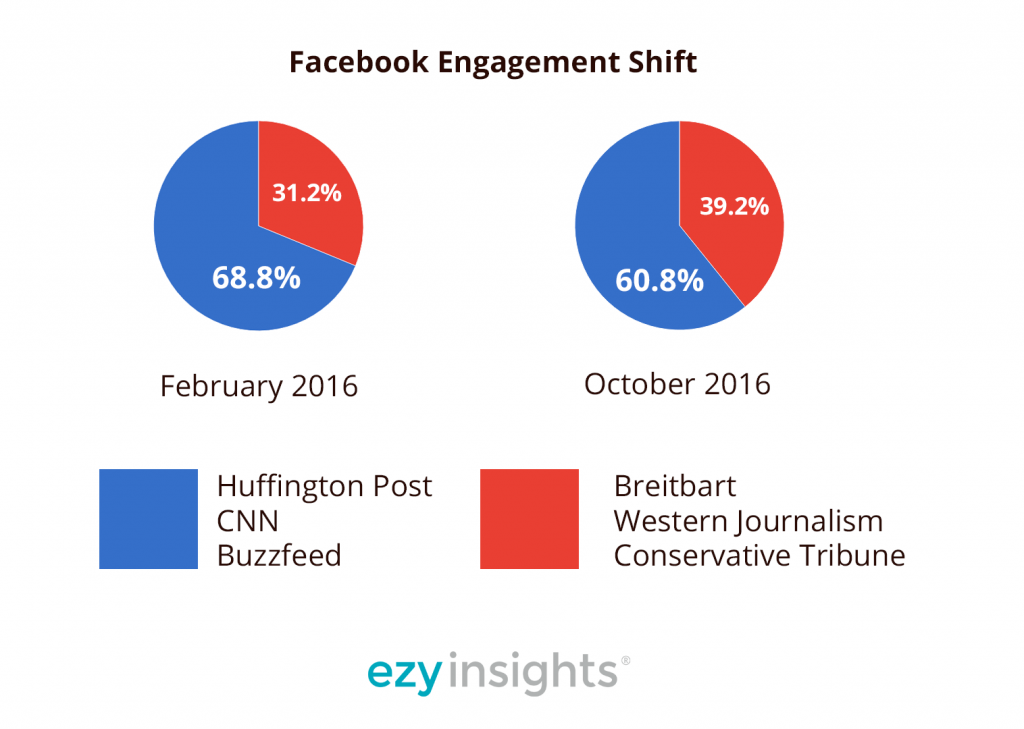

If we compare a selection of 3 typical left of centre mainstream pages with 3 from the conservative right, we see clearly that engagement on the right has increased comparatively:

This provides some evidence that Facebook engagement has increased more on the peripheries of political discourse, which is in line with the idea that the platform’s algorithm encourages polarisation. Facebook is pushing people further apart ideologically, which sets off a chain reaction within individuals’ news feeds.

A study by the Finnish public broadcaster YLE found that when a made-up Facebook account started by following a particular alternative ‘news’ website and actively engaged with everything it posted, the News Feed algorithm did its best to serve more content like it. This led deeper into the extreme, with ‘pages to like’ suggestions that included overtly racist and neo-Nazi pages. This is both common sense and an extreme example of baiting the Facebook algorithm, but something similar is happening to people every day within their own Facebook feeds.

To a degree, Facebook decides which content you see, both from the pages you follow and from the people you are friends with. The idea is to make it a comfortable experience for you. So someone on the Democratic side or the left of the spectrum will see more content shared from those sources and by people they already agree with. Over time, this neatly sorts people into opposing groups, eliminating the middle ground and, importantly, hiding what the ‘other half’ is seeing. Filter bubbles are well documented, but given how much people engage with the platform, what Facebook has accidentally created could be seen as separate realities.

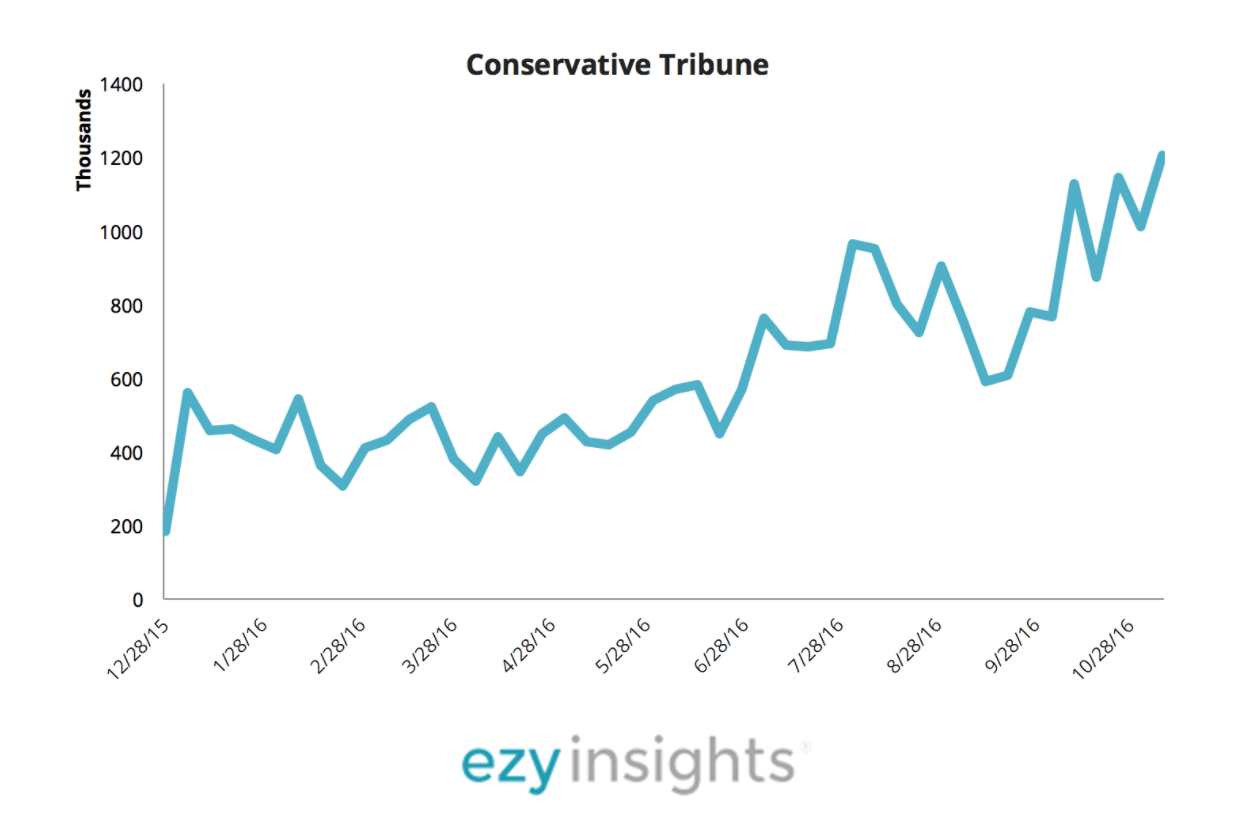

The page Conservative Tribune has seen steady and significant gains since the beginning of the year:

Conservative Tribune is rated as being in the “Extreme Right” category by MediaBiasFactCheck, with additional notes confirming that the site “Consistently fails fact checks, supports racism and promotes conspiracies.” Here’s a selection of the most popular recent articles also posted on Facebook:

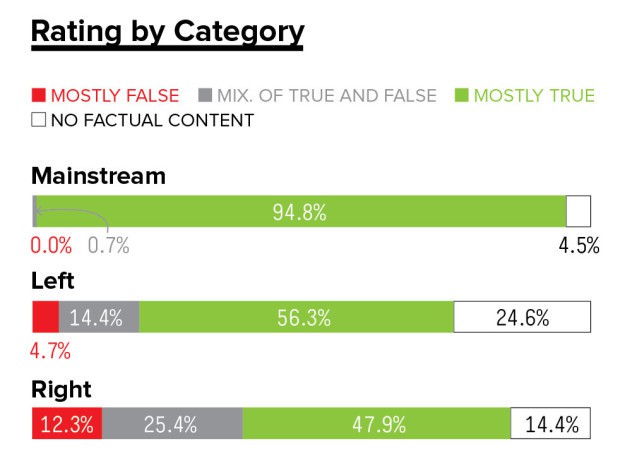

It’s extremely resource-intensive to perform rigorous fact-checking over a large enough sample of data. BuzzFeed News did attempt to analyse a few pages. They found that a significantly higher percentage of news stories from certain websites on the right were rated either Mostly False or a Mixture of True and False:

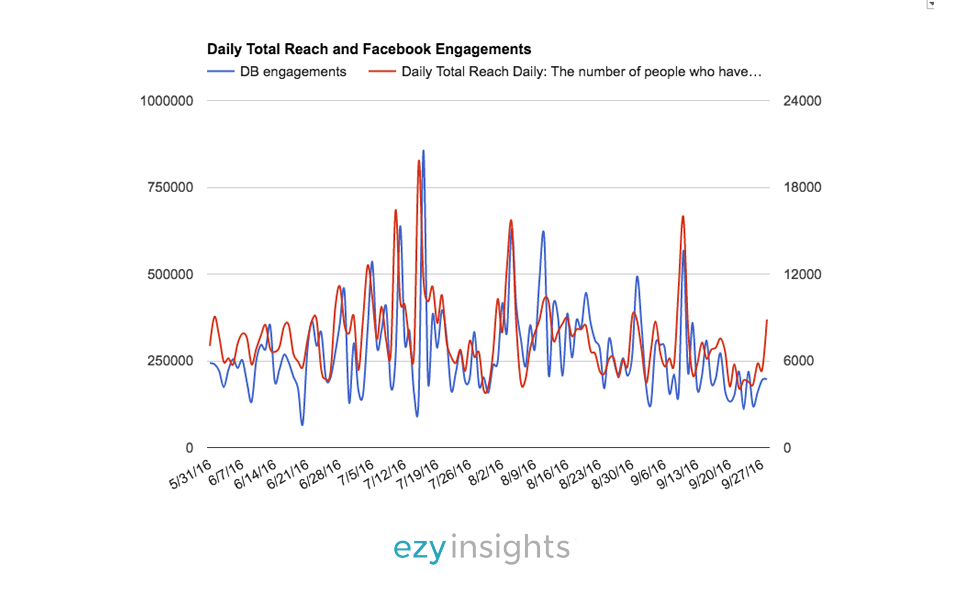

Mark Zuckerberg claims that only 1% of content on Facebook is fake news, but there are two problems with that figure. First, 1% is an extremely high number considering the sheer amount of content posted to the platform daily. Second, it is clear that the sources posting fake or misleading news are getting a significant amount of engagement on Facebook. Engagement means reach, which means more people being exposed to misleading articles. The correlation between engagement and reach is something we’ve also investigated previously at EzyInsights:

As you can see, reach correlates very closely with Facebook engagement. If something is popular and generates likes, comments and shares, not only is it shown to more people, but consistent engagement on posts also raises the reach of the entire page.
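The post does not say exactly how the correlation was measured. As a minimal sketch, assuming a Pearson correlation coefficient computed over per-post engagement and reach figures, the calculation looks like this in Python. The numbers are hypothetical placeholders, not EzyInsights data.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (the 1/n factors cancel, so they are omitted).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical per-post figures: engagement (likes + comments + shares) and reach.
engagement = [120, 340, 560, 800, 1500]
reach = [4_000, 9_500, 15_000, 22_000, 41_000]

print(f"r = {pearson(engagement, reach):.3f}")
```

A value of r close to 1 means the two series rise together almost linearly. On Python 3.10 and later, `statistics.correlation` computes the same coefficient from the standard library.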

We’re still unpicking data around the US election and beyond, but please contact us if you have any requests for data in this area.